Monday, 9 September 2013

Monday, 2 September 2013

Data management

From Wikipedia, the free encyclopedia

Overview

The official definition provided by DAMA International, the professional organization for those in the data management profession, is: "Data Resource Management is the development and execution of architectures, policies, practices and procedures that properly manage the full data lifecycle needs of an enterprise." This definition is fairly broad and encompasses a number of professions which may not have direct technical contact with lower-level aspects of data management, such as relational database management.

Alternatively, the definition provided in the DAMA Data Management Body of Knowledge (DAMA-DMBOK) is: "Data management is the development, execution and supervision of plans, policies, programs and practices that control, protect, deliver and enhance the value of data and information assets."[1]

The concept of "Data Management" arose in the 1980s as technology moved from sequential processing (first cards, then tape) to random access processing. Since it was now technically possible to store a single fact in a single place and access it using random-access disk, those suggesting that "Data Management" was more important than "Process Management" used arguments such as "a customer's home address is stored in 75 (or some other large number) places in our computer systems." During this period, random access processing was not competitively fast, so those suggesting "Process Management" was more important than "Data Management" used batch processing time as their primary argument. As applications moved more and more toward real-time, interactive use, it became obvious to most practitioners that both management processes were important. If the data was not well defined, it would be misused in applications. If the process was not well defined, it was impossible to meet user needs.

Corporate Data Quality Management

Corporate Data Quality Management (CDQM) is, according to the European Foundation for Quality Management and the Competence Center Corporate Data Quality (CC CDQ, University of St. Gallen), the whole set of activities intended to improve corporate data quality (both reactive and preventive). The main premise of CDQM is the business relevance of high-quality corporate data. CDQM comprises the following activity areas:[2]

- Strategy for Corporate Data Quality: As CDQM is affected by various business drivers and requires the involvement of multiple divisions in an organization, it must be considered a company-wide endeavor.

- Corporate Data Quality Controlling: Effective CDQM requires compliance with standards, policies, and procedures. Compliance is monitored according to previously defined metrics and performance indicators and reported to stakeholders.

- Corporate Data Quality Organization: CDQM requires clear roles and responsibilities for the use of corporate data. The CDQM organization defines tasks and privileges for decision making for CDQM.

- Corporate Data Quality Processes and Methods: In order to handle corporate data properly and in a standardized way across the entire organization, and to ensure corporate data quality, standard procedures and guidelines must be embedded in the company's daily processes.

- Data Architecture for Corporate Data Quality: The data architecture consists of the data object model - which comprises the unambiguous definition and the conceptual model of corporate data - and the data storage and distribution architecture.

- Applications for Corporate Data Quality: Software applications support the activities of Corporate Data Quality Management. Their use must be planned, monitored, managed and continuously improved.

Data integrity

From Wikipedia, the free encyclopedia

In computing, data integrity refers to maintaining and assuring the accuracy and consistency of data over its entire life-cycle,[1] and is an important feature of a database or RDBMS system. Data integrity means that the data contained in the database is accurate and reliable. Data warehousing and business intelligence in general demand the accuracy, validity and correctness of data despite hardware failures, software bugs or human error. Data that has integrity is identically maintained during any operation, such as transfer, storage or retrieval.
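One common way to check that data has been "identically maintained" across a transfer or a period of storage is to record a digest beforehand and compare it afterwards. The sketch below is only an illustration under that assumption; the function names and the sample records are hypothetical and do not come from the article.

```python
import hashlib


def checksum(payload: bytes) -> str:
    """Return the SHA-256 digest of the payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def is_intact(payload: bytes, expected_digest: str) -> bool:
    """Report whether the payload still matches the digest recorded earlier."""
    return checksum(payload) == expected_digest


# Hypothetical record; the digest is stored alongside the data before transfer.
original = b"customer_id=42;balance=100.00"
recorded = checksum(original)

received_ok = original                            # arrived unchanged
received_bad = b"customer_id=42;balance=900.00"   # altered somewhere on the way

print(is_intact(received_ok, recorded))
print(is_intact(received_bad, recorded))
```

A digest of this kind only detects a change; it does not say what changed or repair it.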

All characteristics of data, including business rules, rules for how pieces of data relate, dates, definitions and lineage, must be correct for its data integrity to be complete. When functions operate on the data, the functions must ensure integrity. Examples include transforming the data, storing history and storing metadata.

Databases

Data integrity contains guidelines for data retention, specifying or guaranteeing the length of time data can be retained in a particular database. It specifies what can be done with data values when their validity or usefulness expires. In order to achieve data integrity, these rules are consistently and routinely applied to all data entering the system, and any relaxation of enforcement could cause errors in the data. Implementing checks on the data as close as possible to the source of input (such as human data entry) causes less erroneous data to enter the system. Strict enforcement of data integrity rules lowers error rates, which saves time otherwise spent troubleshooting and tracing erroneous data and the errors it causes in algorithms.

Data integrity also includes rules defining the relations a piece of data can have to other pieces of data, such as a Customer record being allowed to link to purchased Products, but not to unrelated data such as Corporate Assets. Data integrity often includes checks and correction for invalid data, based on a fixed schema or a predefined set of rules. An example is textual data entered where a date-time value is required. Rules for data derivation are also applicable, specifying how a data value is derived based on algorithm, contributors and conditions. They also specify the conditions under which the data value could be re-derived.
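The case of free text arriving where a date-time value is required can be sketched as a check applied at the point of entry. This is a minimal illustration, assuming ISO 8601 text as the accepted input format; the function names and sample inputs are hypothetical.

```python
from datetime import datetime


def parse_order_timestamp(raw: str) -> datetime:
    """Accept only ISO 8601 date-time text; reject anything else at entry."""
    try:
        return datetime.fromisoformat(raw.strip())
    except ValueError as exc:
        raise ValueError(f"not a valid date-time: {raw!r}") from exc


def validate_rows(rows):
    """Split raw input into accepted values and rejected entries."""
    accepted, rejected = [], []
    for raw in rows:
        try:
            accepted.append(parse_order_timestamp(raw))
        except ValueError:
            rejected.append(raw)
    return accepted, rejected


# One well-formed value, one free-text entry, one impossible calendar date.
accepted, rejected = validate_rows(
    ["2013-09-02T10:30:00", "next Tuesday", "2013-02-30T00:00:00"]
)
print(accepted)
print(rejected)
```

Rejecting the bad entries here, rather than storing them and cleaning up later, is the "as close as possible to the source" idea from the text.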

Types of integrity constraints

Data integrity is normally enforced in a database system by a series of integrity constraints or rules. Three types of integrity constraints are an inherent part of the relational data model: entity integrity, referential integrity and domain integrity:

- Entity integrity concerns the concept of a primary key. Entity integrity is an integrity rule which states that every table must have a primary key and that the column or columns chosen to be the primary key should be unique and not null.

- Referential integrity concerns the concept of a foreign key. The referential integrity rule states that any foreign-key value can only be in one of two states. The usual state of affairs is that the foreign key value refers to a primary key value of some table in the database. Occasionally, and this will depend on the rules of the data owner, a foreign-key value can be null. In this case we are explicitly saying that either there is no relationship between the objects represented in the database or that this relationship is unknown.

- Domain integrity specifies that all columns in a relational database must be declared upon a defined domain. The primary unit of data in the relational data model is the data item. Such data items are said to be non-decomposable or atomic. A domain is a set of values of the same type. Domains are therefore pools of values from which actual values appearing in the columns of a table are drawn.
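The three constraint types above can be tried out with Python's built-in sqlite3 module. This is a sketch only: the customer and purchase tables, their columns and the helper function are hypothetical, and SQLite stands in for whichever database system is actually in use.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when enabled

conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,   -- entity integrity: unique key per row
    name        TEXT NOT NULL
);
CREATE TABLE purchase (
    purchase_id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customer(customer_id),  -- referential integrity, NULL allowed
    quantity    INTEGER NOT NULL CHECK (quantity > 0)      -- domain integrity
);
""")


def attempt(sql, params):
    """Run one statement; return True if accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


results = {
    "valid customer": attempt("INSERT INTO customer VALUES (?, ?)", (1, "Ada")),
    "duplicate primary key": attempt("INSERT INTO customer VALUES (?, ?)", (1, "Bob")),
    "valid purchase": attempt("INSERT INTO purchase VALUES (?, ?, ?)", (10, 1, 3)),
    "unknown customer": attempt("INSERT INTO purchase VALUES (?, ?, ?)", (11, 99, 1)),
    "null foreign key": attempt("INSERT INTO purchase VALUES (?, ?, ?)", (12, None, 1)),
    "quantity outside domain": attempt("INSERT INTO purchase VALUES (?, ?, ?)", (13, 1, 0)),
    "delete parent with children": attempt("DELETE FROM customer WHERE customer_id = ?", (1,)),
}

for label, accepted in results.items():
    print(f"{label}: {'accepted' if accepted else 'rejected'}")
```

The null foreign key is accepted, matching the second permitted state described for referential integrity, while the attempt to delete a customer that still has purchases is rejected by the database itself rather than by application code.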

Having a single, well-controlled, and well-defined data-integrity system increases:

- stability (one centralized system performs all data integrity operations)

- performance (all data integrity operations are performed in the same tier as the consistency model)

- re-usability (all applications benefit from a single centralized data integrity system)

- maintainability (one centralized system for all data integrity administration).

Examples

An example of a data-integrity mechanism is the parent-and-child relationship of related records. If a parent record owns one or more related child records, all of the referential integrity processes are handled by the database itself, which automatically ensures the accuracy and integrity of the data so that no child record can exist without a parent (also called being orphaned) and that no parent loses its child records. It also ensures that no parent record can be deleted while it owns any child records. All of this is handled at the database level and does not require coding integrity checks into each application.

File systems

Research shows that neither currently widespread file systems (such as UFS, Ext, XFS, JFS and NTFS) nor hardware RAID solutions provide sufficient protection against data integrity problems.[2][3][4][5][6] ZFS addresses these issues, and research further indicates that ZFS protects data better than earlier solutions.[7]

Datasheet

From Wikipedia, the free encyclopedia

A datasheet, data sheet, or spec sheet is a document summarizing the performance and other technical characteristics of a product, machine, component (e.g. an electronic component), material, a subsystem (e.g. a power supply) or software in sufficient detail to be used by a design engineer to integrate the component into a system. Typically, a datasheet is created by the component/subsystem/software manufacturer and begins with an introductory page describing the rest of the document, followed by listings of specific characteristics, with further information on the connectivity of the devices. In cases where there is relevant source code to include, it is usually attached near the end of the document or separated into another file.

Depending on the specific purpose, a data sheet may offer an average value, a typical value, a typical range, engineering tolerances, or a nominal value. The type and source of data are usually stated on the data sheet.

A data sheet is usually used for technical communication to describe technical characteristics of an item or product. It can be published by the manufacturer to help people choose products or to help use the products. By contrast, a technical specification is an explicit set of requirements to be satisfied by a material, product, or service.

An electronic datasheet specifies characteristics in a formal structure that allows the information to be processed by a machine. Such machine readable descriptions can facilitate information retrieval, display, design, testing, interfacing, verification, and system discovery. Examples include transducer electronic data sheets for describing sensor characteristics, and Electronic device descriptions in CANopen or descriptions in markup languages, such as SensorML.
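To make the idea of a machine-processable datasheet concrete, the sketch below parses a small JSON description and checks a value against its stated limits. The part name, field names and numbers are invented for illustration and do not follow TEDS, CANopen device descriptions or SensorML.

```python
import json

# Hypothetical machine-readable datasheet; every field name here is illustrative only.
DATASHEET_JSON = """
{
  "part": "EXAMPLE-REG-5V",
  "parameters": {
    "output_voltage": {"unit": "V", "min": 4.9, "typ": 5.0, "max": 5.1},
    "output_current": {"unit": "A", "min": 0.0, "typ": 0.5, "max": 1.0}
  }
}
"""


def within_limits(datasheet: dict, name: str, value: float) -> bool:
    """Check a measured or required value against the stated min/max range."""
    spec = datasheet["parameters"][name]
    return spec["min"] <= value <= spec["max"]


datasheet = json.loads(DATASHEET_JSON)

print(within_limits(datasheet, "output_voltage", 5.05))
print(within_limits(datasheet, "output_current", 1.2))
```

Because the limits are structured data rather than prose, a design or test tool can run this kind of check without a person reading the document.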

Datacable

Data cable

From Wikipedia, the free encyclopedia

Examples are:

- Networking Media

- Ethernet Cables (Cat5, Cat5e, Cat6, Cat6a)

- Token Ring Cables (Cat4)

- Coaxial cable is sometimes used as a baseband digital data cable, such as in serial digital interface and thicknet and thinnet.

- Optical fiber cable; see fiber-optic communication

- Serial cable

- Telecommunications Cable (Cat2 or telephone cord)

What is data???

Data

From Wikipedia, the free encyclopedia

Data (/ˈdeɪtə/ DAY-tə, /ˈdætə/ DA-tə, or /ˈdɑːtə/ DAH-tə) are values of qualitative or quantitative variables, belonging to a set of items. Data in computing (or data processing) are represented in a structure, often tabular (represented by rows and columns), a tree (a set of nodes with parent-children relationship) or a graph structure (a set of interconnected nodes). Data are typically the results of measurements and can be visualised using graphs or images. Data as an abstract concept can be viewed as the lowest level of abstraction from which information and then knowledge are derived. Raw data, i.e., unprocessed data, refers to a collection of numbers and characters; "raw" is a relative term, because data processing commonly occurs in stages, and the "processed data" from one stage may be considered the "raw data" of the next. Field data refers to raw data collected in an uncontrolled in situ environment. Experimental data refers to data generated within the context of a scientific investigation by observation and recording.

The word data is the plural of datum, neuter past participle of the Latin dare, "to give", hence "something given". In discussions of problems in geometry, mathematics, engineering, and so on, the terms givens and data are used interchangeably. Such usage is the origin of data as a concept in computer science or data processing: data are numbers, words, images, etc., accepted as they stand.
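The tabular, tree and graph representations mentioned above can each be written down with ordinary Python containers. The values and helper names in this sketch are made up for illustration.

```python
# Tabular: rows and columns, here a list of rows keyed by column name.
table = [
    {"city": "Bangkok", "temp_c": 31},
    {"city": "Chiang Mai", "temp_c": 27},
]

# Tree: every node holds its own children (parent-children relationship).
tree = {
    "name": "root",
    "children": [
        {"name": "a", "children": []},
        {"name": "b", "children": [{"name": "c", "children": []}]},
    ],
}

# Graph: interconnected nodes, stored as an adjacency mapping.
graph = {"A": {"B", "C"}, "B": {"A"}, "C": {"A"}}


def column(rows, name):
    """Read one column out of the table."""
    return [row[name] for row in rows]


def count_nodes(node):
    """Count a node plus all of its descendants."""
    return 1 + sum(count_nodes(child) for child in node["children"])


def neighbours(adjacency, node):
    """List the nodes directly connected to the given node."""
    return sorted(adjacency[node])


print(column(table, "temp_c"))
print(count_nodes(tree))
print(neighbours(graph, "A"))
```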

Though data is also increasingly used in humanities (particularly in the growing digital humanities), it has been suggested that the highly interpretive nature of humanities might be at odds with the ethos of data as "given". Peter Checkland introduced the term capta (from the Latin capere, “to take”) to distinguish between an immense number of possible data and a sub-set of them, to which attention is oriented.[1] Johanna Drucker has argued that since the humanities affirm knowledge production as “situated, partial, and constitutive,” using data may introduce assumptions that are counterproductive, for example that phenomena are discrete or are observer-independent.[2] The term capta, which emphasizes the act of observation as constitutive, is offered as an alternative to data for visual representations in the humanities.

Monday, 26 August 2013

Data VS Programs

Data vs programs

Fundamentally, computers follow the instructions they are given. A set of instructions to perform a given task (or tasks) is called a "program". In the nominal case, the program, as executed by the computer, will consist of binary machine code. The elements of storage manipulated by the program, but not actually executed by the CPU, contain data.

Typically, programs are stored in special file types, different from those used for data. Executable files contain programs; all other files are data files. However, executable files may also contain data which is "built-in" to the program. In particular, some executable files have a data segment, which nominally contains constants and initial values (both data).

For example: a user might first instruct the operating system to load a word processor program from one file, and then edit a document stored in another file. In this example, the document would be considered data. If the word processor also features a spell checker, then the dictionary (word list) for the spell checker would also be considered data. The algorithms used by the spell checker to suggest corrections would be either machine code or a code in some interpretable programming language.

The line between program and data can become blurry. An interpreter, for example, is a program. The input data to an interpreter is itself a program, just not one expressed in native machine language. In many cases, the interpreted program will be a human-readable text file, which is manipulated with a text editor, a tool more normally associated with plain text data. Metaprogramming similarly involves programs manipulating other programs as data. Likewise, for programs such as compilers, linkers, debuggers and program updaters, other programs serve as data.
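The blurry line can be seen directly in an interpreted language. In the sketch below, a piece of source text is first handled as plain data (counted and edited with string operations) and then handed to the interpreter to run as a program. The function names inside the text are arbitrary examples.

```python
# A program held as ordinary text data.
source_text = "def double(x):\n    return 2 * x\n"

# Treated as data: measured and edited with plain string operations.
line_count = len(source_text.splitlines())
edited_text = source_text.replace("2 * x", "3 * x").replace("double", "triple")

# Treated as a program: compiled and executed by the interpreter.
namespace = {}
exec(compile(edited_text, "<generated>", "exec"), namespace)
result = namespace["triple"](7)

print(line_count)
print(result)
```

Executing text in this way is only appropriate for input that is trusted, since whatever the text says will be run.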

Data structure

From Wikipedia, the free encyclopedia

In computer science, a data structure is a particular way of storing and organizing data in a computer so that it can be used efficiently.[1][2]

Different kinds of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, B-trees are particularly well-suited for implementation of databases, while compiler implementations usually use hash tables to look up identifiers.

Data structures provide a means to manage large amounts of data efficiently, such as large databases and internet indexing services. Usually, efficient data structures are a key to designing efficient algorithms. Some formal design methods and programming languages emphasize data structures, rather than algorithms, as the key organizing factor in software design. Storing and retrieving can be carried out on data stored in both main memory and in secondary memory.

Overview

- An array data structure stores a number of elements in a specific order. They are accessed using an integer to specify which element is required (although the elements may be of almost any type). Arrays may be fixed-length or expandable.

- A record (also called a tuple or struct) is among the simplest data structures. A record is a value that contains other values, typically in fixed number and sequence and typically indexed by names. The elements of records are usually called fields or members.

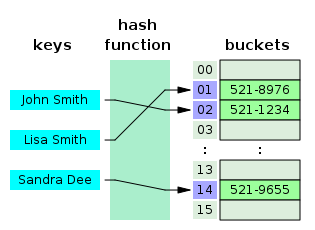

- A hash or dictionary or map is a more flexible variation on a record, in which name-value pairs can be added and deleted freely.

- Union. A union type definition will specify which of a number of permitted primitive types may be stored in its instances, e.g. "float or long integer". Contrast with a record, which could be defined to contain a float and an integer; whereas, in a union, there is only one value at a time.

- A tagged union (also called a variant, variant record, discriminated union, or disjoint union) contains an additional field indicating its current type, for enhanced type safety.

- A set is an abstract data structure that can store specific values, without any particular order, and no repeated values. Values themselves are not retrieved from sets, rather one tests a value for membership to obtain a boolean "in" or "not in".

- An object contains a number of data fields, like a record, and also a number of program code fragments for accessing or modifying them. Data structures not containing code, like those above, are called plain old data structures.
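Most of the structures in this overview have a direct counterpart in Python, sketched below with invented names and values. Python has no untagged union, so the sketch shows a tagged union only, with the class of each value acting as the tag.

```python
from dataclasses import dataclass
from typing import Union

# Array: ordered elements selected by an integer index.
scores = [70, 85, 92]


# Record: a fixed set of named fields.
@dataclass
class Point:
    x: float
    y: float


# Hash / dictionary / map: name-value pairs added and deleted freely.
ages = {"ann": 31}
ages["bob"] = 27
del ages["ann"]

# Set: no order, no repeats, queried by membership test.
seen = {1, 2, 3, 3}


# Tagged union: one value at a time, and its class records which kind it is.
@dataclass
class IntValue:
    value: int


@dataclass
class FloatValue:
    value: float


Number = Union[IntValue, FloatValue]


def describe(number: Number) -> str:
    """Branch on the tag to handle each permitted kind of value."""
    if isinstance(number, IntValue):
        return f"int {number.value}"
    return f"float {number.value}"


# Object: data fields plus the code that accesses or modifies them.
class ClickCounter:
    def __init__(self) -> None:
        self._count = 0

    def increment(self) -> int:
        self._count += 1
        return self._count


print(scores[1], Point(1.0, 2.0), ages, 2 in seen, describe(IntValue(3)))
```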

Basic principles

Data structures are generally based on the ability of a computer to fetch and store data at any place in its memory, specified by an address, a bit string that can itself be stored in memory and manipulated by the program. Thus the record and array data structures are based on computing the addresses of data items with arithmetic operations, while the linked data structures are based on storing addresses of data items within the structure itself. Many data structures use both principles, sometimes combined in non-trivial ways (as in XOR linking).

The implementation of a data structure usually requires writing a set of procedures that create and manipulate instances of that structure. The efficiency of a data structure cannot be analyzed separately from those operations. This observation motivates the theoretical concept of an abstract data type, a data structure that is defined indirectly by the operations that may be performed on it, and the mathematical properties of those operations (including their space and time cost).
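The two addressing principles can be imitated with a Python list standing in for memory, where list indices play the role of addresses. This is a toy model under that assumption; the base address, cell layout and names are all hypothetical.

```python
# Simulated memory: list indices play the role of addresses.
memory = [None] * 16

# Array principle: element i lives at a computed address, base + i * size.
ARRAY_BASE = 2
ELEMENT_SIZE = 1
for i, value in enumerate([10, 20, 30]):
    memory[ARRAY_BASE + i * ELEMENT_SIZE] = value


def array_get(index):
    """Find an element by address arithmetic alone."""
    return memory[ARRAY_BASE + index * ELEMENT_SIZE]


# Linked principle: each node stores a value and the address of the next node.
NIL = -1


def store_node(address, value, next_address):
    """Write a two-cell node: the value, then the address of its successor."""
    memory[address] = value
    memory[address + 1] = next_address


# Nodes are scattered through memory; only the stored addresses connect them.
store_node(12, "a", 8)
store_node(8, "b", 14)
store_node(14, "c", NIL)
HEAD = 12


def linked_to_list(head):
    """Follow stored addresses from node to node until NIL is reached."""
    values, address = [], head
    while address != NIL:
        values.append(memory[address])
        address = memory[address + 1]
    return values


print(array_get(2))
print(linked_to_list(HEAD))
```

Reaching the third array element takes one calculation, while reaching the third linked node means following two stored addresses first, which is why the cost of an operation depends on the structure chosen.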

Language support

Most assembly languages and some low-level languages, such as BCPL (Basic Combined Programming Language), lack support for data structures. Many high-level programming languages and some higher-level assembly languages, such as MASM, on the other hand, have special syntax or other built-in support for certain data structures, such as vectors (one-dimensional arrays) in the C language or multi-dimensional arrays in Pascal.

Most programming languages feature some sort of library mechanism that allows data structure implementations to be reused by different programs. Modern languages usually come with standard libraries that implement the most common data structures. Examples are the C++ Standard Template Library, the Java Collections Framework, and Microsoft's .NET Framework.

Modern languages also generally support modular programming, the separation between the interface of a library module and its implementation. Some provide opaque data types that allow clients to hide implementation details. Object-oriented programming languages, such as C++, Java and Smalltalk may use classes for this purpose.

Many known data structures have concurrent versions that allow multiple computing threads to access the data structure simultaneously.
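As one example of a concurrent data structure shipped in a standard library, Python's queue.Queue can be shared safely between threads. The sketch below has several worker threads drain one queue; the function name and the workload (summing squares) are arbitrary choices for illustration.

```python
import queue
import threading


def run_workers(items, worker_count=4):
    """Sum the squares of the items using several threads that share one queue."""
    tasks = queue.Queue()  # thread-safe FIFO queue from the standard library
    for item in items:
        tasks.put(item)

    total = 0
    total_lock = threading.Lock()  # protects the shared running total

    def worker():
        nonlocal total
        while True:
            try:
                item = tasks.get_nowait()
            except queue.Empty:
                return  # nothing left to do
            with total_lock:
                total += item * item

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return total


print(run_workers(range(1, 11)))
```

The queue handles its own locking, so the workers never receive the same item twice; the running total is an ordinary integer, which is why it needs a separate lock.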

INFORMATION TECHNOLOGY

Information technology

From Wikipedia, the free encyclopedia

In a business context, the Information Technology Association of America has defined information technology as "the study, design, development, application, implementation, support or management of computer-based information systems".[5] The responsibilities of those working in the field include network administration, software development and installation, and the planning and management of an organization's technology life cycle, by which hardware and software is maintained, upgraded and replaced.

Humans have been storing, retrieving, manipulating and communicating information since the Sumerians in Mesopotamia developed writing in about 3000 BC,[6] but the term "information technology" in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that "the new technology does not yet have a single established name. We shall call it information technology (IT)."[7] Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450–1840), electromechanical (1840–1940) and electronic (1940–present).[6] This article focuses on the most recent period (electronic), which began in about 1940.

Homenetwork

Home network

From Wikipedia, the free encyclopedia

A home network or home area network (HAN) is a type of local area network that develops from the need to facilitate communication and interoperability among digital devices present inside or within the close vicinity of a home. Devices capable of participating in this network, smart devices such as network printers and handheld mobile computers, often gain enhanced emergent capabilities through their ability to interact. These additional capabilities can then be used to increase the quality of life inside the home in a variety of ways, such as automation of repetitious tasks, increased personal productivity, enhanced home security, and easier access to entertainment.

Causes

One of the main factors that has historically led to the establishment of a HAN is the out-of-box inability to share residential Internet access among all Internet-capable devices in the home. Due to the effect of IPv4 address exhaustion, most Internet Service Providers provide only one WAN-facing IP address for each residential subscription. Therefore most homes require some sort of device that acts as a liaison capable of network address translation (NAT) of packets travelling across the WAN-HAN boundary. Even though the router's role can be performed by any commodity personal computer with an array of network interface cards, most new HAN administrators still choose to use a particular class of small, passively cooled, table-top devices which also provide the wireless access point functionality necessary to access the HAN via Wi-Fi, a virtual necessity for the multitude of wireless, mobile-optimized devices focused on Internet content consumption. The kinds of routers marketed towards HAN administrators attempt to absorb as many duties as possible from other network infrastructure devices while at the same time striving to make any configuration as automated, user friendly, and "plug-and-play" as possible.

Recently, however, ISPs have started installing Gateway/Router/Wi-Fi combination devices for new customers, which reduces the required setup to simply setting the password.
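The address-sharing role that NAT plays in the paragraphs above can be sketched as a translation table that maps each private address and port onto a port of the single public address. This is a simplified model, not a description of any real router: the class, its fields, the starting port number and the example addresses are all assumptions, and real NAT devices also track the protocol, the remote endpoint and timeouts.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class NatRouter:
    """Toy port-translating NAT: many private endpoints share one public IP."""
    public_ip: str
    next_port: int = 40000  # hypothetical start of the public port pool
    outbound: Dict[Endpoint, int] = field(default_factory=dict)
    inbound: Dict[int, Endpoint] = field(default_factory=dict)

    def translate_outbound(self, private: Endpoint) -> Endpoint:
        """Rewrite a private source endpoint to the shared public address."""
        if private not in self.outbound:
            port = self.next_port
            self.next_port += 1
            self.outbound[private] = port
            self.inbound[port] = private
        return (self.public_ip, self.outbound[private])

    def translate_inbound(self, public_port: int) -> Optional[Endpoint]:
        """Find which private endpoint a reply on this public port belongs to."""
        return self.inbound.get(public_port)


router = NatRouter(public_ip="203.0.113.5")
laptop = router.translate_outbound(("192.168.1.10", 51000))
phone = router.translate_outbound(("192.168.1.11", 51000))

print(laptop, phone)
print(router.translate_inbound(40001))
```

Both devices appear to the outside world as the same IP address and differ only in port, which is how one WAN-facing address can serve the whole home.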

Transmission Media

Home networks may use wired or wireless technologies. Wired systems typically use shielded or unshielded twisted pair cabling, such as any of the Category 3 (CAT3) through Category 6 (CAT6) classes, but may also be implemented with coaxial cable, or over the existing electrical power wiring within homes.

Wireless radio

One of the most common ways of creating a home network is by using wireless radio signal technology: the 802.11 network as standardized by the IEEE. Most products that are wireless-capable operate at a frequency of 2.4 GHz under 802.11b and 802.11g or 5 GHz under 802.11a. Some home networking devices operate in both radio bands and fall under the 802.11n standard.

A wireless network can be used for communication between many electronic devices, to connect to the Internet or to wired networks that use Ethernet technology. Wi-Fi is a marketing and compliance certification for IEEE 802.11 technologies.[1] The Wi-Fi Alliance tests compliant products and certifies them for interoperability.

Existing home wiring

As an alternative to wireless networking, the existing home wiring (coax in North America, telephone wiring in multi-dwelling units (MDU), and power line in Europe and the USA) can be used as a network medium. With the installation of a home networking device, the network can be accessed by simply plugging a computer into a wall socket.

Power lines

Main article: Power line communication

The ITU-T G.hn and IEEE Powerline standards, which provide high-speed (up to 1 Gbit/s) local area networking over existing home wiring, are examples of home networking technology designed specifically for IPTV delivery. Recently, the IEEE passed proposal P1901, which established a standard in the market for wireline products produced and sold by companies that are part of the HomePlug Alliance.[2] The IEEE is continuously working to have P1901 recognized worldwide as the sole standard for all future home networking products.

Telephone wires

Data google

Data Compression Proxy

Faster, safer, and cheaper mobile web browsing with data compression

The latest Chrome browsers for Android and iOS can reduce cellular data usage and speed up mobile web browsing by using proxy servers hosted at Google to optimize website content. In our internal testing, this feature has been shown to reduce data usage by 50% and speed up page load times on cellular networks! To enable it, visit "Settings > Bandwidth Management > Reduce data usage" and toggle the option, easy as that.

Note: The data compression feature is currently available to a subset of Android and iOS users. If you don't see the option, stay tuned, as we are rolling out the feature over the coming months. If you can't wait, you can install Chrome Beta for Android and enable the data compression feature right away.
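As a rough illustration of how compressing content reduces the bytes sent over a network, the sketch below compresses a repetitive HTML page with zlib from the Python standard library. It is not the proxy's implementation: the page content and function name are made up, and the savings on this artificial page say nothing about the 50% figure quoted above.

```python
import zlib


def compression_report(payload: bytes):
    """Return original size, compressed size and the fraction of bytes saved."""
    compressed = zlib.compress(payload, 9)  # 9 is the highest compression level
    saved = 1 - len(compressed) / len(payload)
    return len(payload), len(compressed), saved


# Artificial, highly repetitive page used only for demonstration.
page = (
    "<html><body>" + "<p>Hello, mobile web!</p>" * 200 + "</body></html>"
).encode("utf-8")

original_size, compressed_size, saved = compression_report(page)
restored = zlib.decompress(zlib.compress(page, 9))

print(original_size, compressed_size, f"{saved:.0%}")
```

The compression is lossless, so the receiver recovers exactly the bytes that were sent.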

Features and implementation

The core optimizations, which allow us to reduce overall data usage and speed up the page load times, are performed by Google servers. When the Data Compression Proxy feature is enabled, Chrome Mobile opens a dedicated SPDY connection between your phone and one of the optimization servers running in Google's datacenters and relays all HTTP requests over this connection.

Data facebook

Software Engineering

Data Scientist

Facebook is seeking a Data Scientist to join our Data Science team. Individuals in this role are expected to be comfortable working as a software engineer and a quantitative researcher. The ideal candidate will have a keen interest in the study of an online social network, and a passion for identifying and answering questions that help us build the best products.

Responsibilities

- Work closely with a product engineering team to identify and answer important product questions

- Answer product questions by using appropriate statistical techniques on available data

- Communicate findings to product managers and engineers

- Drive the collection of new data and the refinement of existing data sources

- Analyze and interpret the results of product experiments

- Develop best practices for instrumentation and experimentation and communicate those to product engineering teams

Requirements

- M.S. or Ph.D. in a relevant technical field, or 4+ years experience in a relevant role

- Extensive experience solving analytical problems using quantitative approaches

- Comfort manipulating and analyzing complex, high-volume, high-dimensionality data from varying sources

- A strong passion for empirical research and for answering hard questions with data

- A flexible analytic approach that allows for results at varying levels of precision

- Ability to communicate complex quantitative analysis in a clear, precise, and actionable manner

- Fluency with at least one scripting language such as Python or PHP

- Familiarity with relational databases and SQL

- Expert knowledge of an analysis tool such as R, Matlab, or SAS

- Experience working with large data sets, experience working with distributed computing tools a plus (Map/Reduce, Hadoop, Hive, etc.)
